Storage/NFS Considerations

My homelab needed more serious storage, and along the way I gained some interesting insights that I will share in this post.

Until recently I used central Synology-based NFSv3 storage plus two locally attached PCI flash cards. Because of driver support, those cards restricted me to running ESXi 6.7 on my single physical host (HP DL380 Gen9). I wanted to upgrade to vSphere 8.0U3 for MAC learning, which meant I could no longer use the flash cards as local storage for my (nested) VMs. Therefore I decided to reuse them in a dedicated physical host running ESXi 6.7 (HP DL380 G7).

So we now have a host and an ESXi version that can run these flash cards (4). In addition, we'll use the 10Gb NICs available in both hosts (both ports cross-connected). The search was on for a free, good and easy-to-use NAS virtual appliance. I considered Unraid (not free), TrueNAS (not stable), OpenFiler/XigmaNAS (not tested), and ended up with OpenMediaVault (and some plugins).

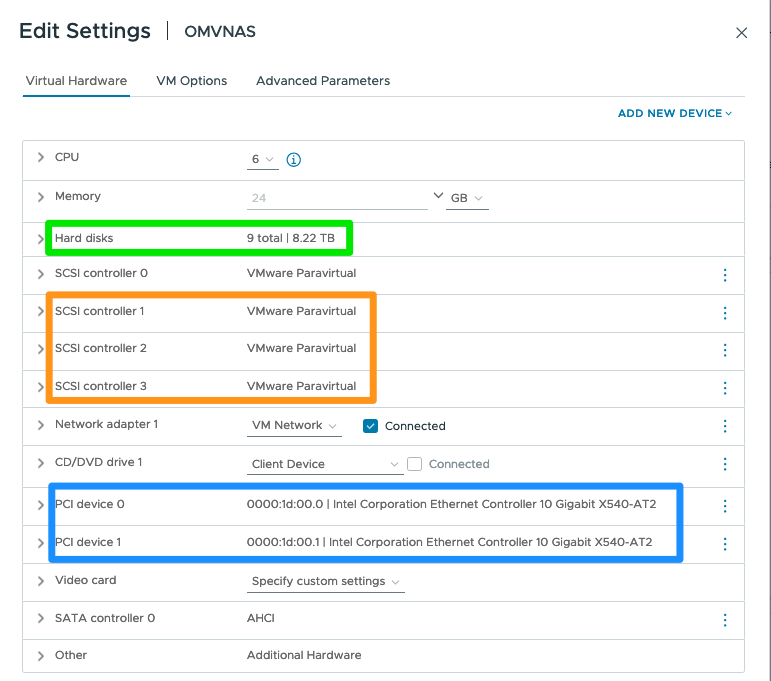

This is where it becomes interesting: how do we get the most out of the available physical and virtual hardware? As far as I was concerned, reads and writes should hit all disks simultaneously and traffic should flow over all available links. I decided to use multiple paravirtual SCSI controllers and to pass the 10Gb NIC ports through to the VM. All available storage from the flash drives is presented to the VM as hard disks, which are assigned round-robin to the available SCSI controllers.

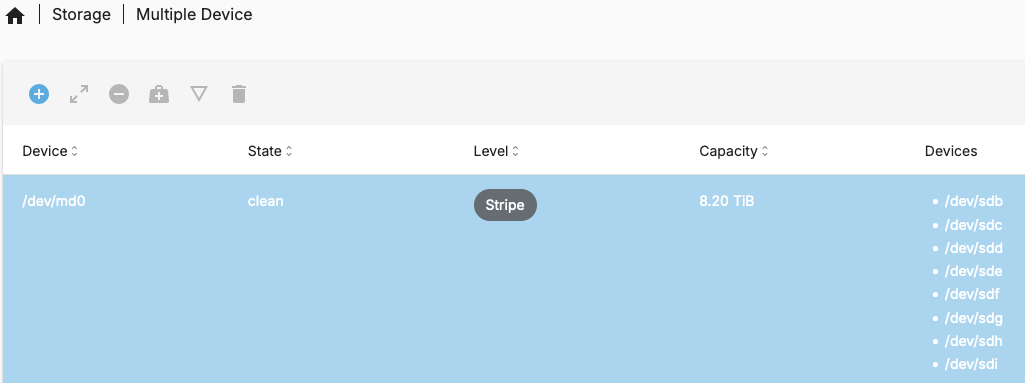

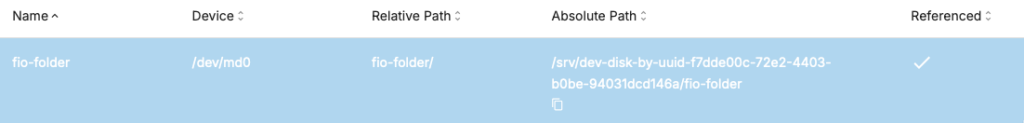

In OMV we then make use of the Multiple-device plugin to create a striped volume across the available disks.

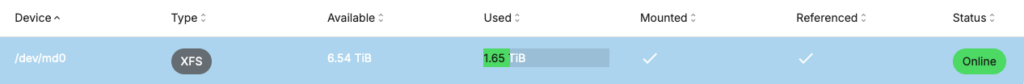

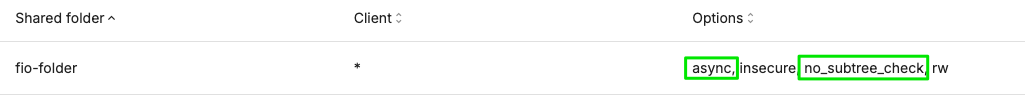
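For readers who prefer to see the idea outside the OMV web interface: a striped multiple-device volume corresponds to a Linux md RAID-0 array. The sketch below is not necessarily what the plugin runs. It only prints an `mdadm` command so it can be reviewed first, and the array name `/dev/md0` and the disk names are placeholders rather than values from this setup.

```shell
#!/bin/sh
# Print (do not run) an mdadm command that stripes the given disks as RAID-0.
# The array name and disk names passed in are placeholders (assumptions).
stripe_cmd() {
    array="$1"; shift
    # After the shift, $# is the number of member disks and $* lists them.
    echo "mdadm --create $array --level=0 --raid-devices=$# $*"
}

# Example: four virtual disks presented by the hypervisor (hypothetical names)
stripe_cmd /dev/md0 /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

RAID-0 offers no redundancy: losing one member disk loses the whole volume, which is the trade-off for spreading I/O over all disks.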

Based on this we can now build a filesystem and a shared folder that will eventually be presented as an NFS export (v3/v4.1). After some testing it became apparent that XFS was best suited for the virtual workloads. For NFS I decided to use the async and no_subtree_check options to speed things up a little (async lets the server acknowledge writes before they reach stable storage, trading crash safety for speed).

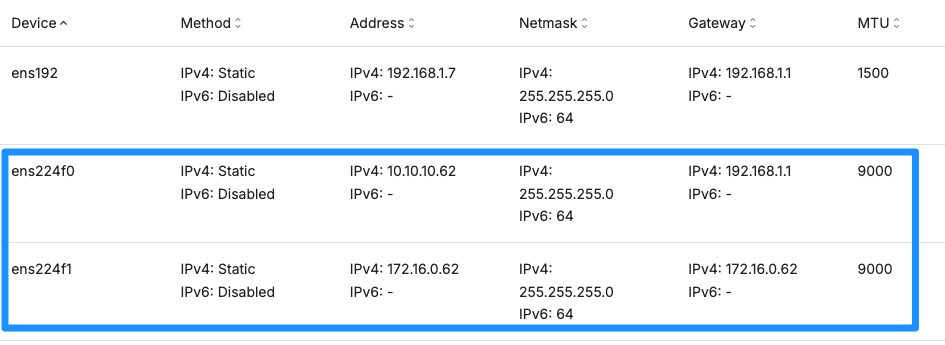

Now on to the networking, where I aimed to use both 10Gb NIC ports (cross-connected between the physical hosts). Therefore I created the following on OMV.

With this, the NFS server side is up and running. For the client-side design I wanted to use multiple NICs and vmkernel ports, preferably on dedicated netstacks. Starting with the latter: VMware has deprecated the option in ESXi 8.0+ to have NFS traffic flow over dedicated netstacks. Previously, to make this work we had to create new netstacks and make SunRPC aware of them. In ESXi 8.0+ the SunRPC commands fail, because the new implementation checks for the use of the default netstack.

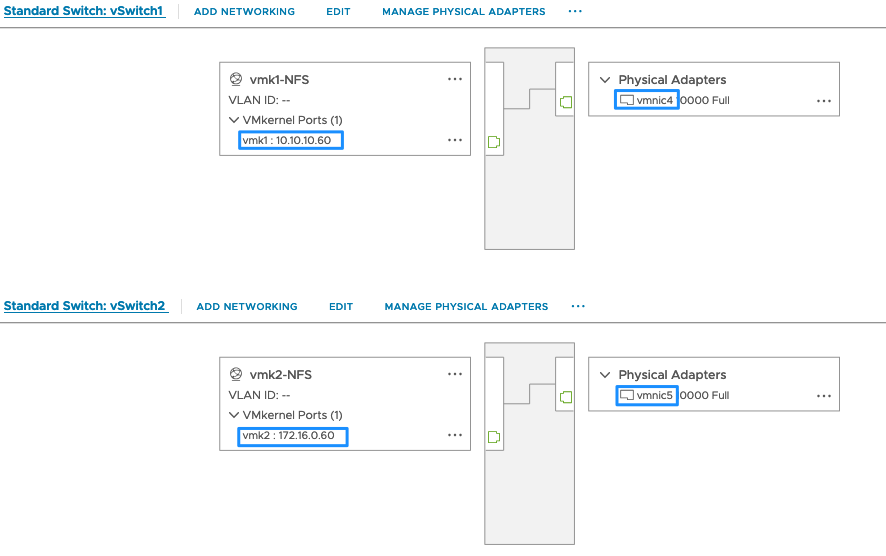

So we are left with the NFS 4.1 options to leverage multiple parallel connections and dedicate the traffic to vmkernel ports. But first, let's have a look at the virtual switch configuration on the NFS client side. As the picture below shows, we created two separate paths, each using a dedicated vmk and its own physical uplink NIC.
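As a rough command-line equivalent of that layout, the sketch below prints the `esxcli` commands for one NFS vmkernel port per path so they can be reviewed before running them on the host. The vmk names, port groups and client addresses are the ones that appear in the output further down; the /24 netmask is an assumption, and the port groups are assumed to exist already on their vSwitches.

```shell
#!/bin/sh
# Print (do not run) the esxcli commands for one NFS vmkernel port.
# Assumptions: the port group already exists, and the subnet is a /24.
vmk_cmds() {
    vmk="$1"; portgroup="$2"; ip="$3"
    echo "esxcli network ip interface add --interface-name=$vmk --portgroup-name=$portgroup --mtu=9000"
    echo "esxcli network ip interface ipv4 set --interface-name=$vmk --ipv4=$ip --netmask=255.255.255.0 --type=static"
}

# One vmk per path, each on its own vSwitch and uplink
vmk_cmds vmk1 vmk1-NFS 10.10.10.60
vmk_cmds vmk2 vmk2-NFS 172.16.0.60
```

Keeping the two vmkernel ports in different subnets is what lets each NFS server address be reached over exactly one path.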

The first thing to check is connectivity between the client and server addresses. There are three ways to do this, from simple to more specific.

[root@mgmt01:~] esxcli network ip interface list

---

vmk1

Name: vmk1

MAC Address: 00:50:56:68:4c:f3

Enabled: true

Portset: vSwitch1

Portgroup: vmk1-NFS

Netstack Instance: defaultTcpipStack

VDS Name: N/A

VDS UUID: N/A

VDS Port: N/A

VDS Connection: -1

Opaque Network ID: N/A

Opaque Network Type: N/A

External ID: N/A

MTU: 9000

TSO MSS: 65535

RXDispQueue Size: 4

Port ID: 134217815

vmk2

Name: vmk2

MAC Address: 00:50:56:6f:d0:15

Enabled: true

Portset: vSwitch2

Portgroup: vmk2-NFS

Netstack Instance: defaultTcpipStack

VDS Name: N/A

VDS UUID: N/A

VDS Port: N/A

VDS Connection: -1

Opaque Network ID: N/A

Opaque Network Type: N/A

External ID: N/A

MTU: 9000

TSO MSS: 65535

RXDispQueue Size: 4

Port ID: 167772315

[root@mgmt01:~] esxcli network ip netstack list

defaultTcpipStack

Key: defaultTcpipStack

Name: defaultTcpipStack

State: 4660

[root@mgmt01:~] ping 10.10.10.62

PING 10.10.10.62 (10.10.10.62): 56 data bytes

64 bytes from 10.10.10.62: icmp_seq=0 ttl=64 time=0.219 ms

64 bytes from 10.10.10.62: icmp_seq=1 ttl=64 time=0.173 ms

64 bytes from 10.10.10.62: icmp_seq=2 ttl=64 time=0.174 ms

--- 10.10.10.62 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.173/0.189/0.219 ms

[root@mgmt01:~] ping 172.16.0.62

PING 172.16.0.62 (172.16.0.62): 56 data bytes

64 bytes from 172.16.0.62: icmp_seq=0 ttl=64 time=0.155 ms

64 bytes from 172.16.0.62: icmp_seq=1 ttl=64 time=0.141 ms

64 bytes from 172.16.0.62: icmp_seq=2 ttl=64 time=0.187 ms

--- 172.16.0.62 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.141/0.161/0.187 ms

[root@mgmt01:~] vmkping -I vmk1 10.10.10.62

PING 10.10.10.62 (10.10.10.62): 56 data bytes

64 bytes from 10.10.10.62: icmp_seq=0 ttl=64 time=0.141 ms

64 bytes from 10.10.10.62: icmp_seq=1 ttl=64 time=0.981 ms

64 bytes from 10.10.10.62: icmp_seq=2 ttl=64 time=0.183 ms

--- 10.10.10.62 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.141/0.435/0.981 ms

[root@mgmt01:~] vmkping -I vmk2 172.16.0.62

PING 172.16.0.62 (172.16.0.62): 56 data bytes

64 bytes from 172.16.0.62: icmp_seq=0 ttl=64 time=0.131 ms

64 bytes from 172.16.0.62: icmp_seq=1 ttl=64 time=0.187 ms

64 bytes from 172.16.0.62: icmp_seq=2 ttl=64 time=0.190 ms

--- 172.16.0.62 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.131/0.169/0.190 ms
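The pings above use the default 56-byte payload, so they do not show whether the 9000-byte MTU configured on vmk1 and vmk2 works end to end. A possible extra check is to send a full-size packet with the don't-fragment bit set. The sketch below computes the largest ICMP payload for a given MTU (MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header) and prints the matching `vmkping` commands; the helper name `icmp_payload` is made up for this example.

```shell
#!/bin/sh
# Largest ICMP payload that fits in one IPv4 packet for a given MTU:
# MTU minus 20 bytes (IPv4 header) minus 8 bytes (ICMP header).
icmp_payload() {
    echo $(( $1 - 28 ))
}

# Print the jumbo-frame test for each vmk/server pair used in this post.
# -d sets the don't-fragment bit, -s sets the payload size.
for pair in vmk1:10.10.10.62 vmk2:172.16.0.62; do
    echo "vmkping -I ${pair%%:*} -d -s $(icmp_payload 9000) ${pair#*:}"
done
```

If such a ping fails while the default-size ping succeeds, some hop on that path (vSwitch, physical NIC or the NAS interface) is most likely not set to MTU 9000.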

[root@mgmt01:~] esxcli network diag ping --netstack defaultTcpipStack -I vmk1 -H 10.10.10.62

Trace:

Received Bytes: 64

Host: 10.10.10.62

ICMP Seq: 0

TTL: 64

Round-trip Time: 139 us

Dup: false

Detail:

Received Bytes: 64

Host: 10.10.10.62

ICMP Seq: 1

TTL: 64

Round-trip Time: 180 us

Dup: false

Detail:

Received Bytes: 64

Host: 10.10.10.62

ICMP Seq: 2

TTL: 64

Round-trip Time: 148 us

Dup: false

Detail:

Summary:

Host Addr: 10.10.10.62

Transmitted: 3

Received: 3

Duplicated: 0

Packet Lost: 0

Round-trip Min: 139 us

Round-trip Avg: 155 us

Round-trip Max: 180 us

[root@mgmt01:~] esxcli network diag ping --netstack defaultTcpipStack -I vmk2 -H 172.16.0.62

Trace:

Received Bytes: 64

Host: 172.16.0.62

ICMP Seq: 0

TTL: 64

Round-trip Time: 182 us

Dup: false

Detail:

Received Bytes: 64

Host: 172.16.0.62

ICMP Seq: 1

TTL: 64

Round-trip Time: 136 us

Dup: false

Detail:

Received Bytes: 64

Host: 172.16.0.62

ICMP Seq: 2

TTL: 64

Round-trip Time: 213 us

Dup: false

Detail:

Summary:

Host Addr: 172.16.0.62

Transmitted: 3

Received: 3

Duplicated: 0

Packet Lost: 0

Round-trip Min: 136 us

Round-trip Avg: 177 us

Round-trip Max: 213 us

With these positive results, we can now mount the NFS share using multiple vmk-based connections and validate that we succeeded.

[root@mgmt01:~] esxcli storage nfs41 add --connections=8 --host-vmknic=10.10.10.62:vmk1,172.16.0.62:vmk2 --share=/fio-folder --volume-name=fio

[root@mgmt01:~] esxcli storage nfs41 list

Volume Name Host(s) Share Vmknics Accessible Mounted Connections Read-Only Security isPE Hardware Acceleration

----------- ----------------------- ----------- --------- ---------- ------- ----------- --------- -------- ----- ---------------------

fio 10.10.10.62,172.16.0.62 /fio-folder vmk1,vmk2 true true 8 false AUTH_SYS false Not Supported

[root@mgmt01:~] esxcli storage nfs41 param get -v all

Volume Name MaxQueueDepth MaxReadTransferSize MaxWriteTransferSize Vmknics Connections

----------- ------------- ------------------- -------------------- --------- -----------

fio 4294967295 261120 261120 vmk1,vmk2 8

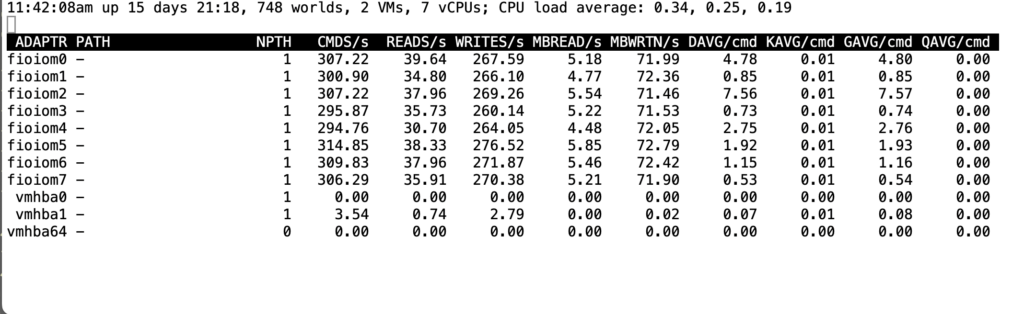

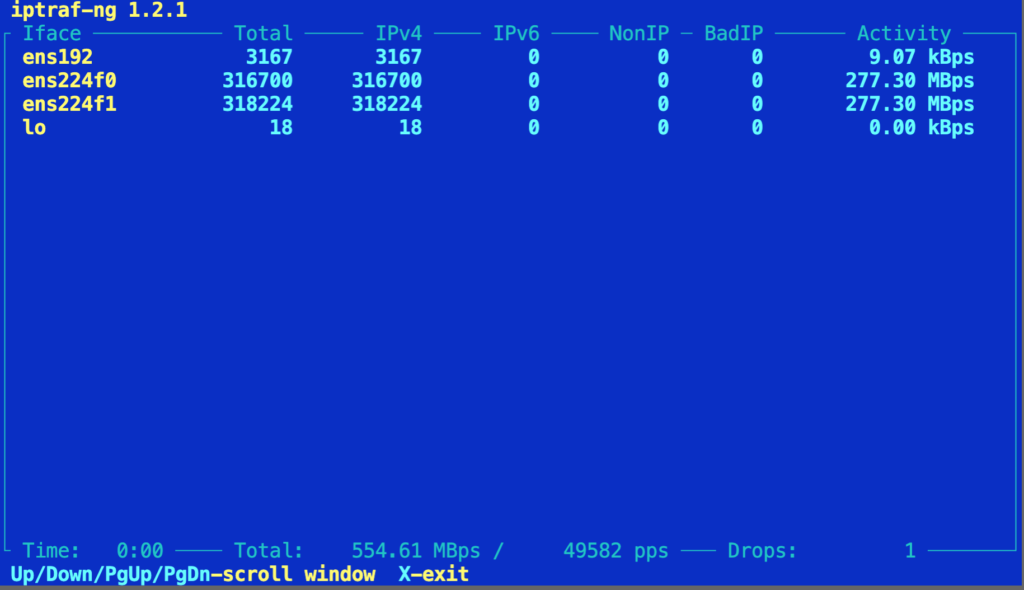

Finally, we validate that both connections are actually used, that the disks are accessed equally, and that performance is what we hoped for (a single-VM Storage vMotion in this test). On the NAS server I installed net-tools and iptraf-ng to create the live-data screenshots. Esxtop is used to get insight into the flash-disk performance on the physical host.

root@openNAS:~# netstat | grep nfs

tcp 0 128 172.16.0.62:nfs 172.16.0.60:623 ESTABLISHED

tcp 0 128 172.16.0.62:nfs 172.16.0.60:617 ESTABLISHED

tcp 0 128 10.10.10.62:nfs 10.10.10.60:616 ESTABLISHED

tcp 0 128 172.16.0.62:nfs 172.16.0.60:621 ESTABLISHED

tcp 0 128 10.10.10.62:nfs 10.10.10.60:613 ESTABLISHED

tcp 0 128 172.16.0.62:nfs 172.16.0.60:620 ESTABLISHED

tcp 0 128 10.10.10.62:nfs 10.10.10.60:610 ESTABLISHED

tcp 0 128 10.10.10.62:nfs 10.10.10.60:611 ESTABLISHED

tcp 0 128 10.10.10.62:nfs 10.10.10.60:615 ESTABLISHED

tcp 0 128 172.16.0.62:nfs 172.16.0.60:619 ESTABLISHED

tcp 0 128 10.10.10.62:nfs 10.10.10.60:609 ESTABLISHED

tcp 0 128 10.10.10.62:nfs 10.10.10.60:614 ESTABLISHED

tcp 0 0 172.16.0.62:nfs 172.16.0.60:618 ESTABLISHED

tcp 0 0 172.16.0.62:nfs 172.16.0.60:622 ESTABLISHED

tcp 0 0 172.16.0.62:nfs 172.16.0.60:624 ESTABLISHED

tcp 0 0 10.10.10.62:nfs 10.10.10.60:612 ESTABLISHED
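Counting these sessions by eye gets tedious, so here is a small sketch that tallies established NFS sessions per server address. It assumes the `netstat` column layout shown above (protocol, receive queue, send queue, local address, foreign address, state); the function name `count_nfs_conns` is made up for this example.

```shell
#!/bin/sh
# Count established NFS sessions per local (server) address.
# Reads netstat-style lines on stdin:
#   proto recv-q send-q local-address foreign-address state
count_nfs_conns() {
    awk '$4 ~ /:nfs$/ && $6 == "ESTABLISHED" {
             split($4, a, ":")   # a[1] is the server IP
             n[a[1]]++
         }
         END { for (ip in n) print ip, n[ip] }' | sort
}

# On the NAS: netstat | count_nfs_conns
```

Applied to the listing above, this gives 8 sessions for 10.10.10.62 and 8 for 172.16.0.62, so both paths carry the same number of connections.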

This concludes this post on my specific NFS storage solution. What I learned:

- NFSv4.1 outperformed NFSv3 by a factor of 2 in my setup (an observation, not a formal comparison)

- XFS outperformed EXT4 by a factor of 3 (ZFS was also tested on TrueNAS and performed very well with sequential I/O)

- The NFSv4.1 client in ESXi 8.0+ cannot be linked to a dedicated/separate netstack

- NFSv4.1 multi-connections based on dedicated vmkernel ports work very well

- Virtualized NAS appliances show good performance, but not all of them are stable (losing NFS volumes, NFS “performance has deteriorated. I/O latency increased” issues)

Responses

I’m not seeing where you show that NFSv4.1 outperforms NFSv3 by a factor of 2.

Did I miss a section where you compared the two?

Hi Joshua, the aim of this post was not to compare NFSv3 with NFSv4.1; the performance difference was just an observation. My aim was to make use of all available NICs, and therefore NFSv4.1 was the way to go. In the end, VM-hosting performance is mainly determined by latency.